On Sunday, August 31, 2014, I did a presentation on the intersection of technology and poetry at art|DBF, an art-oriented segment of the Decatur Book Festival. The presentation was the culmination of several months of coding to develop a system that allowed a poet and an audience to create an interactive soundscape.

Why did I do this?

Most people, when they think of poetry, think of it as this fundamentally human, often life-affirming activity.

Most people, when they think of technology, think of it as this inhumane, if not inhuman, often soul-crushing process.

This is a false dichotomy, of course. Poetry and technology are both artifacts of what humans do. They are both profoundly human acts.

From the campfire to the cathedral, from the crystal AM radio to the liquid crystal display, our technology has affected what form poetry takes, who creates it, who listens to it, where it is experienced, and how it is distributed.

My intent was to build a demonstration of one possible way to enhance the experience of poetry for both poet and audience.

How did it work?

I built a web-based audio application that let smartphones control sounds.

The phones accessed a web server running on my laptop. Through the audience pages, people could read the poems being performed and manipulate sounds using one of three instruments.

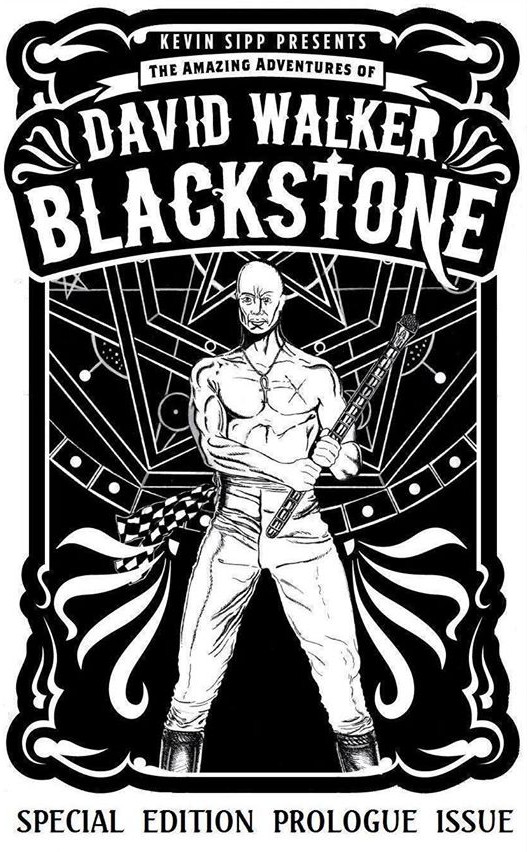

Audience UI

The audience accesses a website via smartphone. The site offers a view of the current poem, a dropdown selection of poems, and links to one of three musical interfaces, the first of which displays by default.

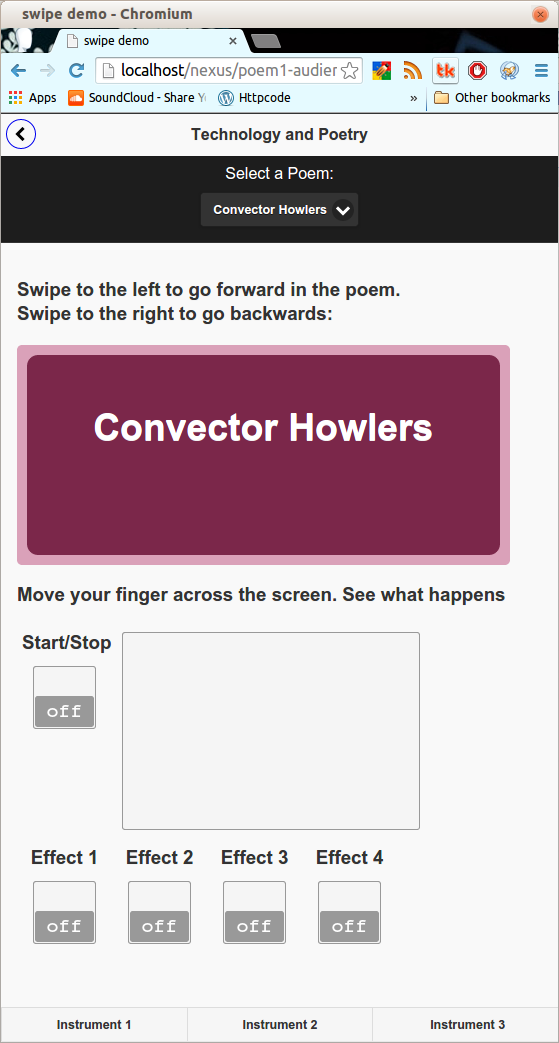

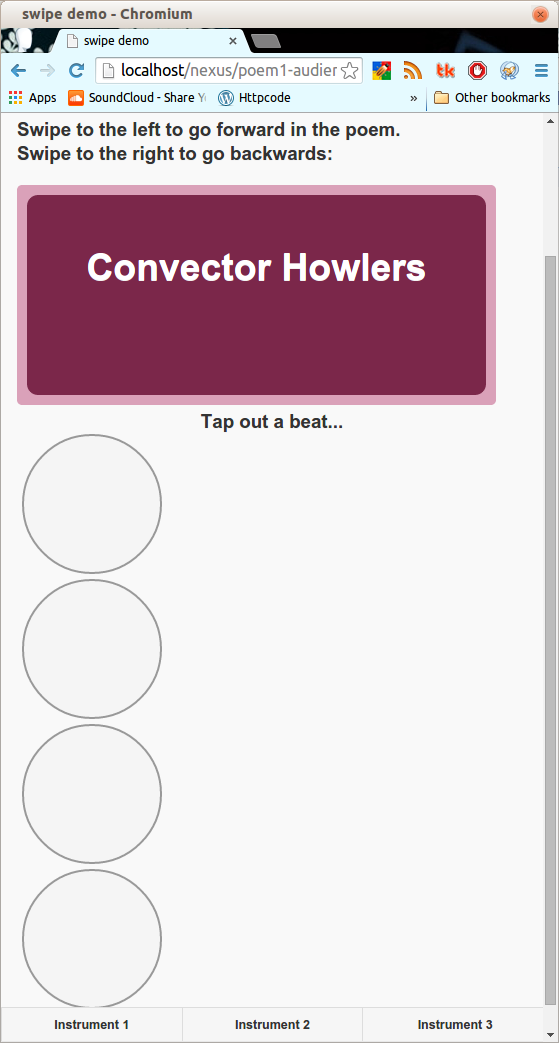

The three interfaces do the following things:

- Instrument 1: creates a rain stick-like sound with different effects based on moving a point within a small window;

- Instrument 2: a set of four percussion pads;

- Instrument 3: a text area that creates sounds for each word typed.

Poet UI

The interface for the poet has six options – unfortunately only four worked at the time of performance, and only three worked without issues.

- Effect 1: pitch follower creating an audio effect a fifth higher than the detected frequency

- Effect 2: pitch follower creating an audio effect a seventh higher than the detected frequency

- Effect 3: Multicomb filter

- Effect 4: Spectacle filter

- Effect 5: Hypnodrone – drone effect kicked off by detected amplitude

- Effect 6: Stutter – warbling bass line using sine oscillator. Originally intended to create a glitch effect.
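To make the interval arithmetic behind Effects 1 and 2 concrete, here is a minimal sketch in Python of how a frequency "a fifth higher" or "a seventh higher" than a detected pitch could be computed. It assumes 12-tone equal temperament, and since the list above does not say whether the seventh was minor or major, both are included. The names `SEMITONES` and `shift_frequency` are hypothetical and are not taken from the actual ChucK scripts.

```python
# Semitone counts in 12-tone equal temperament. This tuning is an assumption;
# the original effects may have used different ratios or a different seventh.
SEMITONES = {
    "perfect_fifth": 7,
    "minor_seventh": 10,
    "major_seventh": 11,
}


def shift_frequency(detected_hz: float, interval: str) -> float:
    """Return the frequency that lies `interval` above `detected_hz`."""
    if detected_hz <= 0:
        raise ValueError("detected frequency must be positive")
    # Each semitone multiplies the frequency by the twelfth root of two.
    return detected_hz * 2 ** (SEMITONES[interval] / 12)


if __name__ == "__main__":
    # A3 (220 Hz) shifted up a perfect fifth lands on E4.
    print(round(shift_frequency(220.0, "perfect_fifth"), 2))  # 329.63
```

A pitch follower would feed each newly detected frequency through a function like this and hand the result to an oscillator, so the effect tracks the voice at a fixed musical distance.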

The speaker had a separate interface for adding vocal effects and a background beat.

The web pages sent messages to a set of ChucK scripts running on my laptop. The scripts generated the sounds, altered the vocals, and recorded the presentation.
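The post does not say which protocol carried those messages. One common way to drive ChucK from another program is Open Sound Control (OSC) over UDP, so the sketch below assumes that. It shows, in Python, how a server-side handler might encode and send a single OSC message. The address `/pad/1`, port `6449`, and the function names are all hypothetical placeholders, not details from the actual project.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address: str, value: float) -> bytes:
    """Encode an OSC message carrying one big-endian float32 argument."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def send_osc(address: str, value: float,
             host: str = "127.0.0.1", port: int = 6449) -> None:
    """Send one OSC message over UDP (host and port are assumed values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc(address, value), (host, port))


if __name__ == "__main__":
    # For example, a tap on a percussion pad might be forwarded like this.
    send_osc("/pad/1", 0.5)
```

UDP is fire-and-forget, which suits a live performance: a dropped message costs one missed sound rather than a stalled page.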

How did it go?

The presentation itself was well-received. It took place in the tent for Eyedrum, an Atlanta-based nonprofit organization that develops contemporary art, music, and new media in its gallery space.

I did my presentation outside with a set of powered PC speakers attached to the laptop. Later, I borrowed a PA and mixer from my friend and fellow poet Kevin Sipp. By the way, check out his debut graphic novel, The Amazing Adventures of David Walker Blackstone.

The laptop was attached to a wireless router that passersby could use to connect to the website. Everyone was able to connect and interact with the site. There were some glitches – which I’ll talk about later – but for the most part, people seemed intrigued by the possible uses of mobile and web technology for poetic performances.

A couple of components either did not perform as expected or did not work at all. Of the audience-specific pages, Instrument 3 did not play or was at too low a volume to be heard over the ambient sounds of the festival. There were also some issues with switching between poems.

The poet-specific pages had issues with two of the six effects: “Multicomb” and “Spectacle”. The multicomb filter had a problem with feedback and was too loud. The spectacle effect didn’t work at all. In addition, the audio suffered from latency issues. The recording of the first twenty minutes of the presentation developed unintended glitching and was pretty much ruined. The recording of the last fifteen minutes was a little better (I stopped the recording to switch to Kevin’s PA setup), but it ran into the same issue not long into the presentation.

Conclusion

Overall, I think the presentation was well-received, and people were intrigued by what they heard. The issues with the setup became clear when I reviewed the recordings. There’s definitely room for improvement, and I will build upon this design for future performances.

So good, bad, or ugly, I’m posting both recordings (Part 1 and Part 2) and the code for all to see.

Despite the issues, I consider the project a success. This is a prototype, so I expected some problems. Luckily, none of the problems were catastrophic. There were lots of bloops and bleeps, but nothing went “boom”. It would only have been a failure if I had learned nothing from the experience.

Until next time, check out the code, play with it, and let me know if you use it or modify it.
